Warning: Speculative physics bullshit by a non-physicist

Nature just published a short commentary on a possible quantum-theoretical foundation of spacetime. In short, it suggests that spacetime is not the reason why particles get entangled with each other (i.e. they got close enough to influence each other), but that it is the other way around: spacetime is the emergent result of the entanglement of particles. The case is not strong yet, but it would have huge implications for a grand unified theory, and possibly also for the relationship between the quantum world and computation.

The idea, as far as I understand it (and please correct me), is the following: Usually, when we think about the universe, we start by assuming a spacetime substrate, i.e. everything that happens does so in space and time, and these form an n-dimensional manifold (imagine it as a multidimensional grid that describes a curved space, with the curvature supplied by gravity). Every event can be indexed by its position in time and space. In classical physics, every particle has a well-defined position. Unfortunately, classical physics has been proven to be wrong, and quantum mechanics makes this more complex, because now every particle can only be characterized by constraints on a range of positions. The position only gets actualized when something observes the particle, which basically means that it gets entangled with it; before the observation, the particle's position gets "smeared" into a probability distribution.

Quantum mechanics has some interesting implications. First of all, the probability distribution does not simply mean that we do not really know where the particle is. It means that the particle is somehow in all of these positions at once (and since the particle cannot be "diluted", it is also in no position at all). Because the particle is "in all positions at once", it can tunnel through boundaries, which is why transistors work: if the electrons are not observed too hard, their positions become a bit "mushy", and they can tunnel through a layer of insulation. Unobserved particles behave differently from those that are observed. This feature also applies to other properties besides position, such as electron spin.

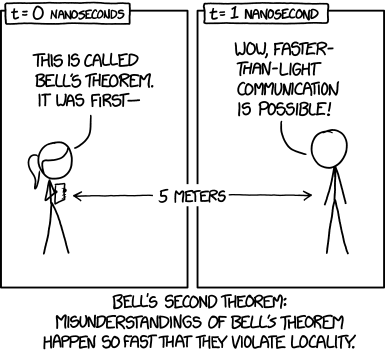

If two particles are in close proximity, they influence each other, and become "entangled". For instance, a pair of electrons sharing an atomic orbital will have opposing spins. If I measure one of them, I will also know the spin of the other one, and consequently, the other electron will behave differently with respect to that property. This "spooky action at a distance" annoyed Einstein, because it happens instantly, instead of spreading at the speed of light. Sadly, we probably cannot use it to transmit information faster than the speed of light by breaking an electron pair, carefully not observing the electrons, and giving one of them to a friend before he moves to Alpha Centauri. If we observe our electron, we still need to inform our friend about the observation before he will notice a difference.

(Source: xkcd)
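The electron pair described above can be written down in a few lines. This is only a toy sketch, under my own assumptions: both spins are measured along the same axis, and the pair is in the textbook "singlet" state. The names `singlet`, `joint_probabilities` and `friend_marginal` are made up for this illustration.

```python
import math

# Amplitudes of the singlet state. Keys read (our electron, friend's electron):
# "ud" means ours is spin-up and the friend's is spin-down.
# Assumption: both spins are measured along the same axis.
AMP = 1 / math.sqrt(2)
singlet = {"uu": 0.0, "ud": AMP, "du": -AMP, "dd": 0.0}


def joint_probabilities(state):
    """Born rule: the probability of each joint outcome is |amplitude|^2."""
    return {outcome: abs(a) ** 2 for outcome, a in state.items()}


def friend_marginal(state):
    """What the friend sees locally, ignoring our result entirely."""
    probs = joint_probabilities(state)
    return {
        "u": probs["uu"] + probs["du"],  # friend's spin up
        "d": probs["ud"] + probs["dd"],  # friend's spin down
    }


print(joint_probabilities(singlet))
print(friend_marginal(singlet))
```

The joint outcomes with equal spins have probability zero, so the two results always disagree. The friend's own statistics, however, are a plain coin flip, which is why he needs our message before he can notice anything.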

From an information-theoretic perspective, entanglement means that the two entangled entities share bits. The big computer that runs the universe does not implement a classical physics engine, where every object is constrained to a particular position and has its very own data structure stored at an index with that position; instead, some information can appear in two or more positions at once. The electron of our friend at Alpha Centauri literally shares a bit with ours. The entanglement of the two particles amounts to some kind of "wormhole" that punches right through spacetime and connects Earth with Alpha Centauri. Observation is an act of entanglement, too: by observing the spin of an electron, the observer gets entangled with it, and thereby with everything else the electron shares state with.

The first implication of quantum mechanics, that unobserved states are superpositional (i.e. in all possible states at once), makes it very expensive to calculate the quantum world on a classical computer. Quantum computation is not somehow more powerful than Turing computation: a Turing machine has infinite amounts of memory and processing time at its disposal, so it can run an arbitrarily large quantum world just fine. Unfortunately, our university does not own an infinite Turing machine, so all models of quantum processes have to be run on classical computers, and if we make the quantum system larger, then we need to add new computers to our data center much faster than we add particles to the simulated quantum world. A computer that can simulate all quantum processes in a cubic millimeter of space won't fit into our solar system.
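To put a rough number on how fast the data center has to grow, here is a back-of-the-envelope sketch. It assumes the simplest possible case: every particle is a two-state system (like a spin), and the simulator stores one complex amplitude of 16 bytes per joint basis state. Both the function name `state_vector_bytes` and the 16-byte figure are my assumptions, not something from the commentary.

```python
def state_vector_bytes(n_particles: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a brute-force state vector of n two-state particles.

    n particles have 2**n joint basis states, and a naive simulator
    keeps one complex amplitude for each of them.
    """
    return (2 ** n_particles) * bytes_per_amplitude


for n in (10, 30, 50, 300):
    print(f"{n:>3} particles: {state_vector_bytes(n):.3e} bytes")
```

Every additional particle doubles the memory, so the cost grows exponentially while the simulated world only grows linearly.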

The second implication of quantum mechanics, non-locality, is confusing for people who would like to believe that the location of things is ontologically real, but it does not bother computer scientists like me who think about things as information that has to be computed by the universe. Non-locality simply means that the programmer of the Minecraft world that you and I inhabit sometimes uses the same data to code for objects that appear at different coordinates. It saves a lot of memory, I guess.

But most entanglement is not confusing, because it simply happens to objects that are in close proximity in spacetime. They exchange forces, which means that they influence each other, and consequently begin to share some of their state. Entanglement can be seen as a function of spacetime.

According to Mark van Raamsdonk (the physicist featured in the Nature commentary), we should consider the opposite interpretation. What if entanglement does not result from spacetime, but spacetime is an emergent result of entanglement? To use a metaphor, for the programmer of a Minecraft universe, it means that we throw away our current codebase, which describes everything with a 3d matrix, and diminishing relations between neighboring blocks, depending on their distance. Our new codebase only uses a set of blocks and their interactive relations with other blocks. The appearance of a world that is neatly organized into a space happens because some of these relations will be stronger and others weaker. Proximity is the result of entanglement, not the other way around. Can this work for our physical universe, too?
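The "new codebase" of the metaphor can be sketched in a few lines. To be clear, this is my Minecraft analogy in code, not van Raamsdonk's mathematics: the relation strengths are invented toy data, and the mapping from strength to distance (`-log(strength)`) is an arbitrary choice I made for illustration.

```python
import math

# Hypothetical toy data: relation strengths between blocks, in (0, 1].
# Note that no block has coordinates anywhere in this structure.
relations = {
    ("a", "b"): 0.9,
    ("b", "c"): 0.9,
    ("a", "c"): 0.3,
}


def strength(x, y):
    """Symmetric lookup; unrelated blocks have strength 0."""
    if x == y:
        return 1.0
    if (x, y) in relations:
        return relations[(x, y)]
    return relations.get((y, x), 0.0)


def emergent_distance(x, y):
    """Assumed mapping for illustration: a stronger relation means 'closer'."""
    s = strength(x, y)
    return math.inf if s == 0.0 else -math.log(s)


def neighbours_by_proximity(block, blocks):
    """Order the other blocks by how close they appear to `block`."""
    others = [b for b in blocks if b != block]
    return sorted(others, key=lambda b: emergent_distance(block, b))


print(neighbours_by_proximity("a", ["a", "b", "c"]))
```

From the point of view of block `a`, block `b` appears nearer than block `c`, although nothing in the data says where anything is.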

According to van Raamsdonk, it might, and he uses an insight by Juan Maldacena from 1997 to argue for it. Maldacena used a dramatically simplified model of a relativistic spacetime, the infinite three-dimensional Anti-de Sitter space, which contains particles and has gravity, and compared it to a two-dimensional quantum field that is made from entangled particles, encloses an infinite universe, and does not use gravity (a conformal field theory). Maldacena could show that the quantum field, which can be represented as a tensor network, is completely equivalent to the Anti-de Sitter space. We can perform all computations that give rise to whatever happens in the gravitational spacetime model using the much simpler formulation of the tensor network. The entangled quantum field turns out to be just a different representation of the spacetime universe.

The mathematical equivalence between toy models of a relativistic universe and a quantum universe does of course not mean that we have a grand unified theory just yet. Van Raamsdonk has begun to show equivalences beyond Maldacena's work (if we reduce the entanglement, then the spacetime continuum comes apart), and the next years might become quite exciting.

I am struck by the elegant simplicity of the idea. It turns Einstein's universe inside out: the topology and curvature of spacetime are no longer the substrate for local interaction between particles. Instead, particles have no "real" place; they are strictly just information that can be represented as tensors. Particles may share properties, which means that they are entangled, and some of these entangled properties result in what appears to be spacetime to us. Computationally, this makes a huge difference in how we calculate our universe: we no longer start out with a giant matrix that stores all particles at the indices of their position in spacetime. Spacetime is an emergent result of the calculations.

With respect to non-locality, this makes a lot of sense, because we already gave up on local realism when we were forced to accept that Bell's inequality is violated, and effectively allowed different fields in the universe matrix to be pointers to the same value. We can give up on the fiction of the presupposed matrix completely, if we can show that we can compute relative positions as a function of the tensors that describe entangled particles. Some features will not translate into positions, and these non-positional features will look like "spooky action at a distance" to the inhabitants of the resulting universe.

An even more fascinating (and outlandish!) consequence might result in how we compute superpositions. For example, a photon that travels through spacetime without interactions will not change its state (because no relativistic time passes for the particle itself). It will manifest itself as a particle when hitting an observer, but will behave as a wave during the journey. This makes the calculation of the trajectory of the photon expensive, because it behaves as if it is in many positions at once, even though it eventually manifests only in one. But in the inverted perspective, this is not necessary, because a definite spacetime position does not exist with respect to each particle! There is absolutely no need to map the position of the photon to all of the indices of some grand matrix that spans the distance between sun and observer, and to update all of the Planck length matrix cells through which the probability wave "travels". The photon is never "smeared" or spread through spacetime; this is entirely an artifact of it not having an exact position. If the universe does not maintain its contents in a spacetime matrix, i.e. does not have to index everything with an absolute position, it will only have to update the changes in entanglement properties.

The currently high computational cost of quantum mechanics may be entirely due to our stubborn insistence on filling a spacetime matrix with values that happen to be just virtual.

If my wild conjecture were right, it might mean that physicists will replace much of the codebase they built in the 20th century. Gone will be a basic assumption that has been around at least since the Greek philosopher Plato, who maintained that "space is that in which things come to be". Space is then not a substrate that we inhabit, but simply what entanglement looks like to the inhabitants of a tensor network.
